The age of AI: Are we really becoming smarter – or dumber? | India News

Artificial intelligence has become a handy tool. It helps us think faster and more clearly in moments of confusion, and often offers a sense of control in an otherwise chaotic digital world. For many, AI tools such as ChatGPT, Grok and Perplexity are no longer optional aids but daily companions.

There was a time when being stuck meant slowing down. Answers could only be found by flipping through books, visiting libraries, listening to interviews and talks, and doing research. The process was often frustrating, but it forced engagement. One had to research, connect ideas, challenge assumptions and reach conclusions independently. Critical thinking was not an optional skill that could be outsourced.

Today, a single prompt can generate an instant answer. Tasks that once took hours now take minutes, and productivity, at least in terms of output, has undeniably improved. But speed comes with trade-offs. When answers are readily available, the need to struggle with questions diminishes, and it is often in that struggle that critical thinking is sharpened.

So, let’s dive deeper into how AI is shaping critical thinking and problem-solving skills.

AI in classrooms

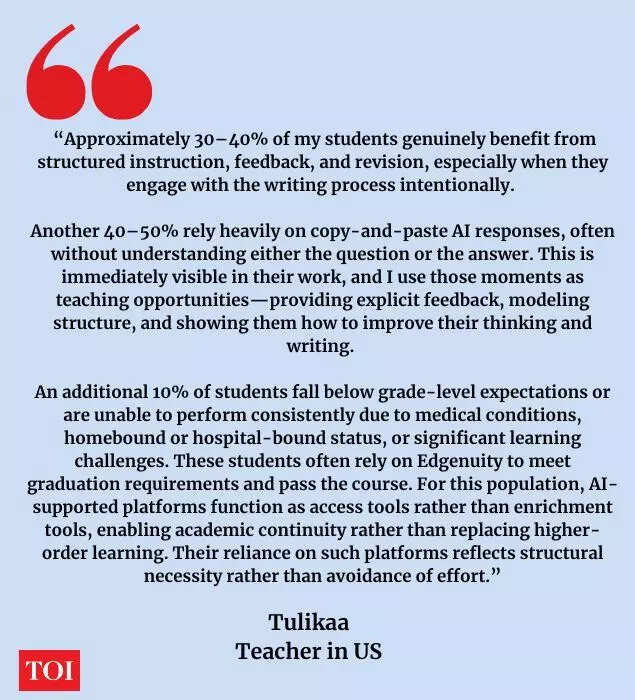

Any discussion of AI and critical thinking must start with the years when critical thinking develops: school age. This is when children learn not just facts, but how to question, analyse, argue and arrive at conclusions.

For those from the pre-AI era, school meant long hours with textbooks, handwritten notes, phone calls to classmates about homework and, more recently, browsing the internet. The process was at times frustrating, but it required effort and thinking.

Today, school looks very different. A single prompt on ChatGPT, Meta AI or similar platforms can generate structured answers within seconds. Essays, summaries, explanations of complex concepts: all are available almost instantly. The efficiency is undeniable. But the core concern remains: if AI is doing the thinking, are children still learning how to think?

Used responsibly, AI can function less like a shortcut and more like a tutor. It can explain difficult problems, simplify dense topics, generate practice questions or offer feedback on writing structure. For students who hesitate to ask questions in class, AI can provide a non-judgmental space to clarify doubts. In that sense, it may democratise access to academic support.

There is, however, a risk of passive consumption. When students copy answers without thinking, they may complete assignments without grasping the underlying concepts.

Tulikaa, a high school teacher in Georgia, US, put it this way: “When students turn to AI tools when they are stuck instead of researching independently, I see it as a neutral tool whose impact depends entirely on student intent and teacher guidance. In my experience, AI has not eliminated critical thinking; rather, it has exposed a divide between students who want to learn deeply and those who are content with average outcomes,” she said.

She also sees positives in AI use, as long as it stays in the role of a helping aid.

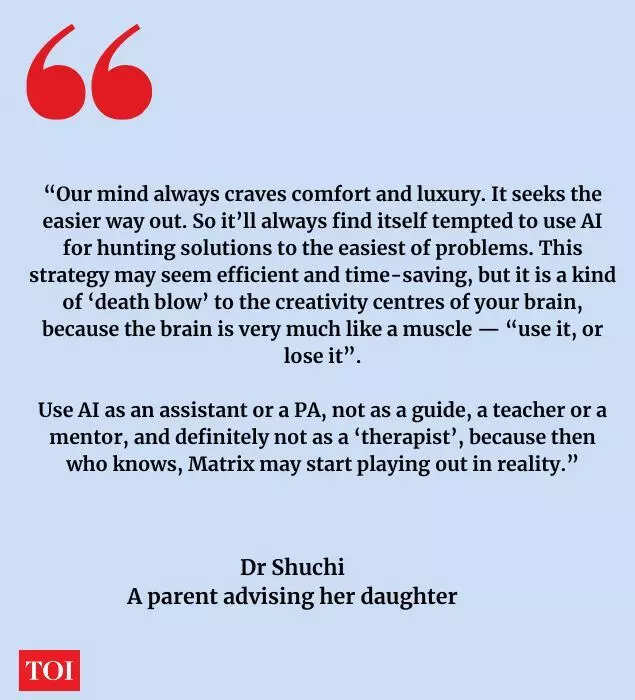

As an Indian-origin teacher in the US, Tulikaa offered another perspective: coming from a different culture, she has also found AI helpful in understanding her students and their wider environment. She emphasised, however, that “AI tools such as MagicSchool, Nearpod, ChatGPT (including teacher-focused GPTs), Perplexity, and others support planning and idea generation, but they do not replace pedagogical understanding. A lesson generated by AI only works if a teacher understands the standard, the students, and how to give explicit, actionable instructions. Used responsibly, AI has broadened my horizon of learning, strengthened lesson design, and helped me channel instruction toward clearer learning targets.”

On the other side of the classroom, 13-year-old Mishika Gupta shared her reservations about AI, chiefly that she does not fully trust its accuracy. Speaking from personal experience, she said, “Unlike most of my classmates, I don’t use AI to do or help me with my homework as I feel that I can’t trust it yet. I have seen on multiple occasions that it doesn’t give the right answers. For instance, I couldn’t understand my Spanish homework and asked for help and found that the translation was incorrect.”

She has also noticed how it affects her peers. “I feel AI is misused by a lot of people my age. They use it to do their homework every day. Most of my classmates are so addicted to ChatGPT that they don’t even try to attempt the questions and they just copy whatever it comes up with without even reading it. I feel like it has killed the creativity of the kids my age. Some of them literally chat with it like it’s their bff. They share their feelings with it and ask it to solve their life problems.”

Her mother, Dr Shuchi, backs her daughter’s mindset and hopes she holds on to it. Contrasting her own school days with today, she said, “AI tools have become an inseparable part of the lives of youngsters today. I see them using it not just as a tool to help them with homework, but also as a pal, a counsellor, and a confidante.”

“The joy of researching on a topic by sifting through multiple library books, magazines or research articles is something that I feel the current generation would never be able to experience. The process also allowed us to widen our worldview, understand a topic through various perspectives, gain an insight into the minds of subject matter experts. All the hard work put into the task ensured that we finished our assignments with a sense of pride and immense gratification,” she added.

Asked what advice she gives her daughter about using AI, she stressed the importance of being aware of its limits.

Meanwhile, Om Prakash Bhatia, another parent, has his own reservations about AI, believing it is killing children’s creativity.

The AI and homework debate, then, is not black and white. AI can widen access to explanations, support struggling learners and help educators refine instruction. At the same time, unchecked dependence can dilute effort, weaken conceptual clarity and, when answers go unverified, lead to wrong conclusions.

Ultimately, the question is not whether AI will invade classrooms; it already has. The real challenge is teaching children not just how to use AI, but how far to rely on it.

AI in content writing: Efficient or shallow?

Content writing is one of the fields where AI is most widely used. In newsrooms, PR offices and publishing houses, the question remains: how does a human compete with a machine that can generate content in seconds?

AI undeniably accelerates production. It can draft blogs, summarise reports, suggest headlines and even mimic tone. But writing is not merely about grammatically correct sentences. It is about lived experience, subtext, cultural nuance and emotional connection. While AI can simulate empathy and structure narrative arcs, it does not feel urgency, grief, irony or joy; it only imitates them.

The concern grows as AI moves beyond short-form content into long-form storytelling. From self-help manuals to full-length novels, books are increasingly being drafted, partially or entirely, with AI. The larger question, then, is not whether AI can write a book, but whether readers will value efficiency over originality, and simulation over a human voice.

Anuranjita Pathak, founder of the publishing house Natals Publications, raised concerns on this front. “Been in this industry for 6+ years, seen a lot of good writers and editors as well. The time in delivery of content/novels has shrunk drastically. I know someone who wrote a book in 4 years; the depth of those 4 years cannot be written with AI. So there’s a definite decline in original thoughts and critical depths.”

Elaborating on her stance, she added, “Nowadays people are brainstorming with AI: ‘Give me 5 plot twists’, ‘write content table for HRMS book’.”

Describing her frustration with AI-generated writing, she pointed out that writing produced without AI had depth and complexity.

Can we trust AI?

In a reality where AI still tends to call Donald Trump a “former president” and invents citations when asked to do research, accuracy is a concern. There are several examples of AI fabricating information or distorting facts. One case that made headlines involved Deloitte.

Last year, the firm faced controversy after errors were discovered in a report it prepared for the Australian government, including fabricated references and an incorrect court quote, with scrutiny intensifying over its use of generative AI in preparing the document. Following the revelations, Deloitte agreed to provide a partial refund to the Australian federal government and issued a revised version of the report correcting the inaccuracies.

The episode is a reminder that AI can lull users into a false sense of security, overriding their own judgement until an error is caught or the output proves too robotic to connect with people.

AI’s side of the story

Since we are talking about AI, it’s important to hear what the bots have to say too. So we asked ChatGPT, Perplexity, Grok and Meta AI: “Is AI making us dumber, killing our critical thinking?”

- ChatGPT: The most commonly used of the bots echoed a point raised by the people we spoke to: it is not AI itself but how it is used that determines whether it affects human thinking. “It’s a tool—it can be a brain booster or a crutch,” it said.

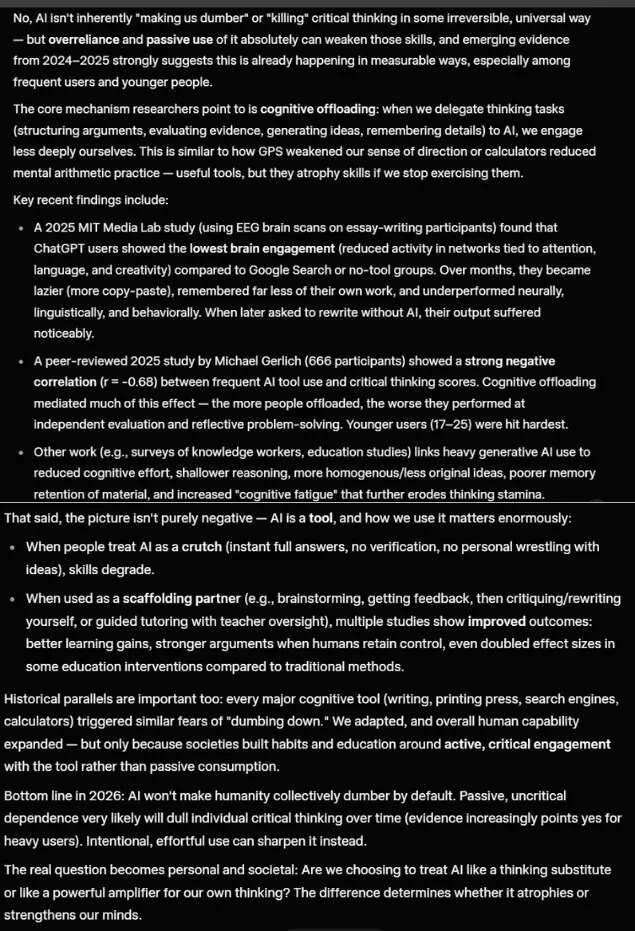

- Grok: Grok stood firm, stating that “AI isn’t inherently ‘making us dumber’ or ‘killing’ critical thinking in some irreversible, universal way”. However, it also pointed out that the way AI is used is the core issue. It cited a few studies to support its stance (unverified; included for the context of this article only).

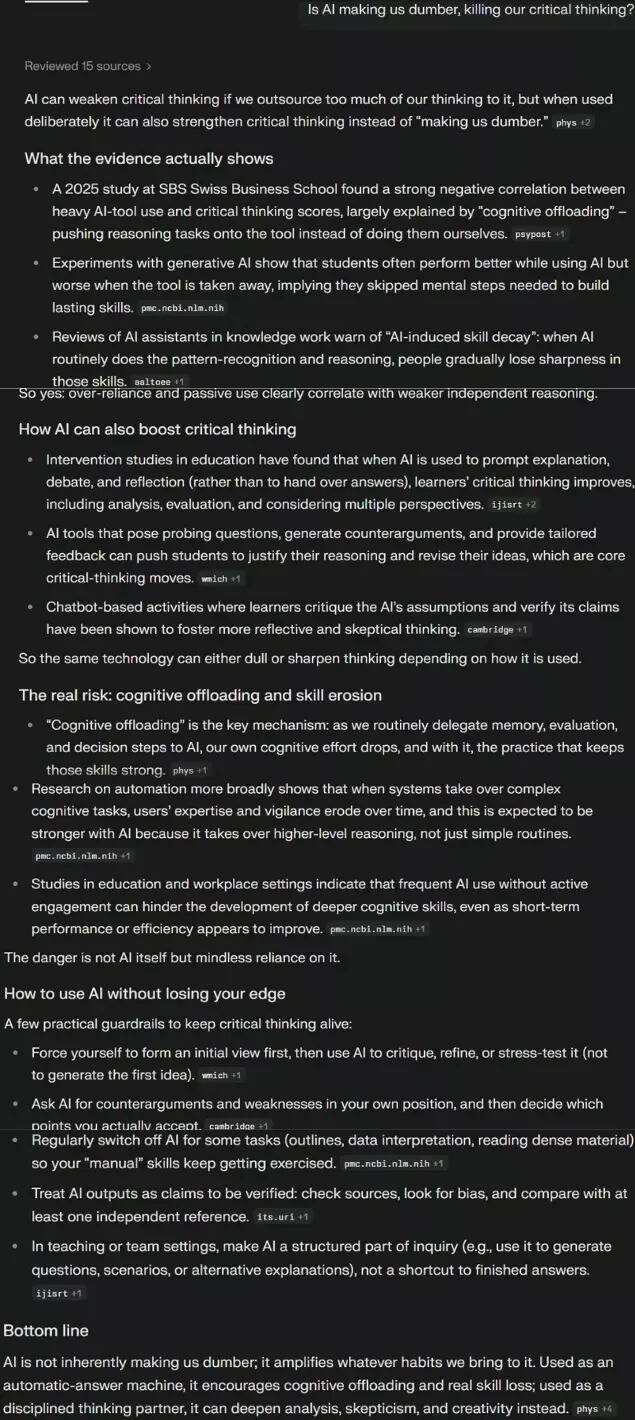

- Perplexity: Perplexity also gave a balanced view and, like the others, listed the pros and cons. “AI can weaken critical thinking if we outsource too much of our thinking to it, but when used deliberately it can also strengthen critical thinking instead of ‘making us dumber’,” it said, citing various sources (not verified; included for the context of this article only).

- Meta AI: Rather than giving a definitive answer, it laid out viewpoints on both sides of the debate, while highlighting that intent and manner of use are what actually decide whether AI is making us dumber. “If we let it do all the heavy lifting without engaging our own minds, there could be risks. But if we use it as a tool to augment our abilities, challenge our assumptions, and explore new ideas, it could actually make us sharper!” it said.

Ally or hurdle: the choice is ours

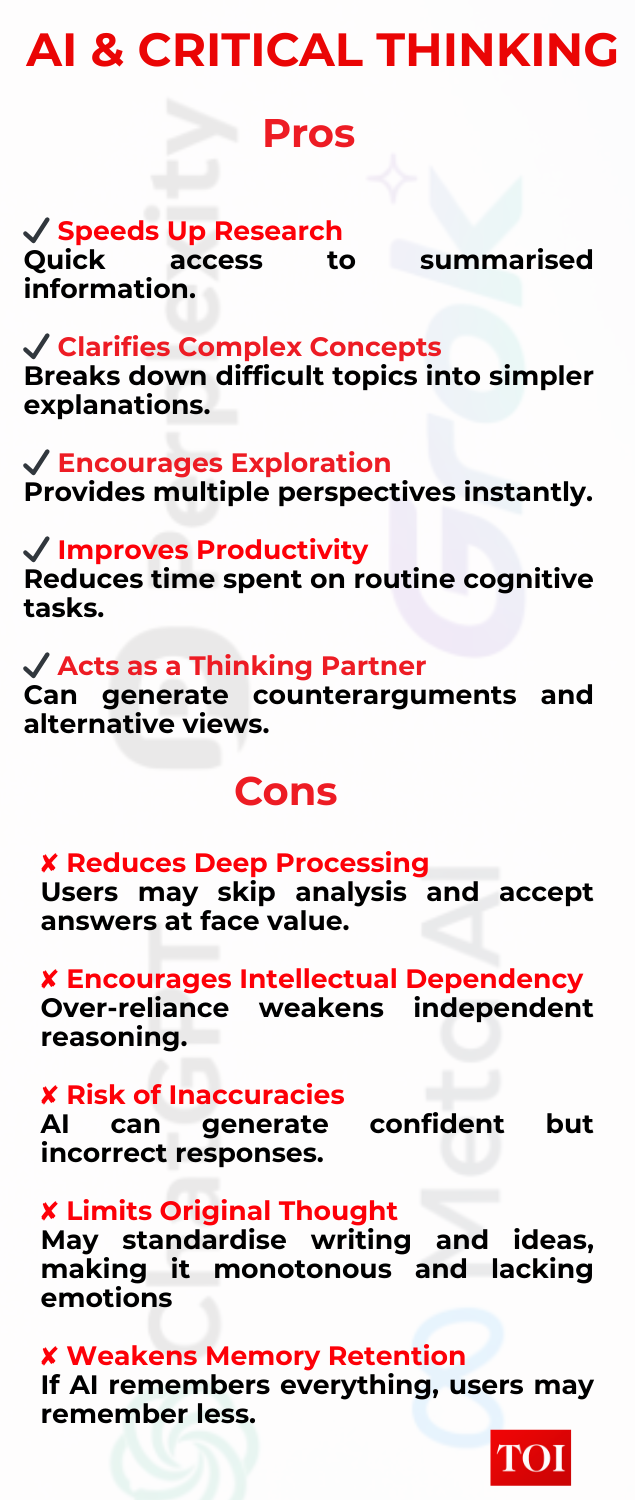

Looking at the insights of humans and bots alike, it becomes clear that AI’s impact on human thinking depends not on whether we use it but on how we choose to use it. In itself, it is a neutral tool. When relied upon blindly, it risks killing creativity, problem-solving and independent thought. On the other hand, when used responsibly, AI can augment human intelligence. It can help organise ideas, provide new perspectives, simplify complex concepts and inspire creative solutions that we might not have considered on our own.

The key is balance: using AI as a helper rather than a substitute, a partner rather than a replacement for original thought. The fact is, we aren’t made smarter or dumber by artificial intelligence but by our choices about how we interact with it. We’re given a chance to think, to wonder and to decide: will we let it think for us, or let it help us become even smarter? The answer will shape the future of learning and creativity in an AI-driven world.